If you really want to hear about it: the first thing you probably want to know on a workday morning is how the previous day's work went. I was lucky this morning to hear a big round of applause for winning yet another hackathon at a client location. In a hackathon you build something useful in 8 to 24 hours; Facebook's Like button, for example, was built during one.

I chose a topic to showcase “how the right choice of technology and programming style can increase application throughput many times over”. The first question that came to mind was how I would demonstrate that what I built is better, and I realized I needed something to compare it with. I would build the application the traditional way, measure its throughput, then build exactly the same thing the way I think is better and compare the results. That's it; my instinct told me this was the way to go 🙂

I built an application that mocks Facebook's OAuth 2.0 response. I kept it very simple: one validation to check that an access token exists in the request, then flush a dummy response. The source code is here on GitHub.
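The mock's core logic is small enough to sketch as a plain Scala function, kept independent of any servlet or play API so it runs on its own. This is a hypothetical reconstruction, not the code in the repository: the object name `FacebookMockLogic`, the `access_token` parameter name, the status codes and the JSON bodies are all assumptions.

```scala
// Hypothetical core of the Facebook OAuth 2.0 mock: validate, then return a canned body.
object FacebookMockLogic {
  // Canned response; the fields are illustrative only.
  val DummyBody: String = """{"id":"1234567890","name":"Mock User"}"""

  // Returns (HTTP status, body). The only validation is that an
  // access token is present and not blank.
  def respond(params: Map[String, String]): (Int, String) =
    params.get("access_token").filter(_.trim.nonEmpty) match {
      case Some(_) => (200, DummyBody)
      case None    => (400, """{"error":"missing access_token"}""")
    }
}
```

`FacebookMockLogic.respond(Map("access_token" -> "abc"))` yields status 200 with the dummy body, while a request with no token, or a blank one, gets a 400.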

I then built the same functionality using “play & scala” (get the source code here). I chose “play” because it runs on Netty, a non-blocking server, which I expected to boost throughput further alongside the asynchronous, non-blocking programming style I wanted to showcase. “play” also supports hot-swapping (changing and testing code without a restart). I chose “scala” because it makes it easy to write concise, asynchronous, non-blocking code, and I thought it would also give a practical illustration of my previous blog post, multicore crisis.

I then wrote a JMeter test script to put load on both applications. From this script I first ran the “facebook_imperative” test, which loads the traditional web application deployed on Tomcat with 300 threads configured in server.xml. I used 400 JMeter threads to fire concurrent requests for one second. The result is below. As expected, throughput is around 544 requests per second, with an error rate above 3%. The errors occur because Tomcat could not handle that many requests and some of them timed out. Errors are obviously something I want to avoid, because they compromise the application's reliability.

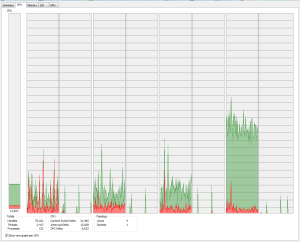

I wrote exactly the same functionality in play & scala and ran the equivalent test, “facebookmock_sync”, from the same script. Its execution context also used exactly 300 threads (see FacebookMock.facebookSync and config.ConfigBlocking.executionContext), and JMeter again used 400 threads to fire requests.
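A thread pool capped at 300 threads, like the one the play app used for this test, can be built from the standard library alone. The sketch below is an assumption about what `config.ConfigBlocking.executionContext` might look like, not its actual contents; `BlockingConfig`, `FacebookSyncSketch` and the daemon-thread factory are hypothetical names added so the example runs standalone.

```scala
import java.util.concurrent.{Executors, ThreadFactory}
import scala.concurrent.{ExecutionContext, Future}

// Hypothetical stand-in for config.ConfigBlocking.executionContext.
object BlockingConfig {
  val PoolSize: Int = 300 // same as Tomcat's thread count in this test

  // Daemon threads so this standalone example does not keep the JVM alive.
  private val daemonThreads: ThreadFactory = (r: Runnable) => {
    val t = new Thread(r)
    t.setDaemon(true)
    t
  }

  // Fixed pool: at most PoolSize tasks run at once; extra tasks wait in its queue.
  val executionContext: ExecutionContext =
    ExecutionContext.fromExecutor(Executors.newFixedThreadPool(PoolSize, daemonThreads))
}

// Hypothetical counterpart of FacebookMock.facebookSync: the response is
// produced on the 300-thread pool rather than on the caller's thread.
object FacebookSyncSketch {
  def facebookSync(accessToken: Option[String]): Future[String] =
    Future {
      if (accessToken.exists(_.trim.nonEmpty)) """{"id":"1234567890","name":"Mock User"}"""
      else """{"error":"missing access_token"}"""
    }(BlockingConfig.executionContext)
}
```

Because `facebookSync` returns a `Future`, a request that finds all 300 pool threads busy waits in the pool's queue as a pending task rather than holding a thread of its own.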

Now compare the results. There are no errors in the “play & scala” app, and the throughput is 6000+ requests per second ✌, roughly 11 to 12 times that of the traditional app (6000 / 544 ≈ 11). If I may take some liberty in interpreting it, this means I can serve that many times more customers with the same infrastructure, and with a much quicker response time (look at the Average). It also means I need a much smaller tech ops team, and I will have more happy customers for less expense and investment.

I decided to take this further by simulating something more realistic. Not all calls return immediately like this mock response; some are blocking calls, e.g. JDBC calls. I simulated this with a 1-second delay using Thread.sleep before responding. That is how we usually write code: block the thread and wait for the result :(
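The blocking variant can be sketched as follows. `BlockingMock` and `mockResponseBlocking` are hypothetical names, and the delay is a parameter (defaulting to the post's 1000 ms) so it can be shortened for testing.

```scala
// Hypothetical blocking version of the mock: Thread.sleep stands in for a
// slow call such as a JDBC query, and the calling thread is parked meanwhile.
object BlockingMock {
  val DummyBody: String = """{"id":"1234567890","name":"Mock User"}"""

  def mockResponseBlocking(delayMillis: Long = 1000L): String = {
    Thread.sleep(delayMillis) // this thread can serve nothing else for delayMillis
    DummyBody
  }
}
```

In a thread-per-request server such as Tomcat, every call like this ties up one of the server's threads for the full delay.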

I ran the blocking test “facebookmock_blocking_Imperative” against the traditional application: the same application deployed on Tomcat, but with the “doGet” method now calling the blocking version of the mock response. The equivalent test, “facebookmock_async”, ran against the play app.
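A back-of-the-envelope bound helps explain what happens next. By Little's law (requests in the system = throughput × time each one spends there), if every request holds one of Tomcat's 300 threads for the full 1-second sleep, the server can complete at most about 300 requests per second, whatever the load. This is an idealized estimate that ignores connector queuing and other overhead.

$$
\lambda_{\max} = \frac{N_{\text{threads}}}{W} = \frac{300}{1\,\text{s}} = 300\ \text{requests/s}
$$

If all 400 JMeter requests arrive together, at least 100 of them must wait a full second or more for a free thread; with 1000 JMeter threads, at least 700 do, which is consistent with the error rate rising as the load grows.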

As you can see in the figures above, the error rate (18%) of the traditional app is unacceptable, so the throughput shown in figure ‘traditional_blocking_test’ is misleading: JMeter counts failed requests in it too. The play app, by contrast, still shows no errors. The difference in the code is that I released the server thread as early as possible using Futures & Promises. In fact, I also ran the blocking test with 1000 JMeter threads: the traditional app gave a 50% error rate, while the play app still had 0% errors.
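Releasing the server thread with a `Promise` can be sketched with the standard library alone. This illustrates the technique rather than reproducing the repository's code: `AsyncMock`, `facebookAsync`, the separate 300-thread pool and the daemon thread factory are assumptions. The calling thread only creates the promise, hands the slow work to another pool and returns the promise's future at once; the slow work still blocks a thread, just not the one serving the request.

```scala
import java.util.concurrent.{Executors, ThreadFactory}
import scala.concurrent.{ExecutionContext, Future, Promise}
import scala.util.Try

object AsyncMock {
  val DummyBody: String = """{"id":"1234567890","name":"Mock User"}"""

  // Daemon threads so this standalone example does not keep the JVM alive.
  private val daemonThreads: ThreadFactory = (r: Runnable) => {
    val t = new Thread(r)
    t.setDaemon(true)
    t
  }

  // Separate pool for the slow work; the size of 300 is an assumption.
  private val blockingPool: ExecutionContext =
    ExecutionContext.fromExecutor(Executors.newFixedThreadPool(300, daemonThreads))

  def facebookAsync(delayMillis: Long = 1000L): Future[String] = {
    val promise = Promise[String]()
    blockingPool.execute(new Runnable {
      def run(): Unit =
        // Try turns an exception (e.g. InterruptedException) into a failed future.
        promise.complete(Try {
          Thread.sleep(delayMillis) // blocks a pool thread, not the caller's
          DummyBody
        })
    })
    promise.future // returned immediately; the caller's thread is free again
  }
}
```

In play, an action can hand back such a `Future` through `Action.async`, so the server does not dedicate a thread to the request while the response is pending.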

Here is the best part. I converted the blocking call into a non-blocking one using a scheduler, and this time I used a pool of just 1 thread (see Scheduler.scala and Config.scala). And wow! The play app still works with just one thread, with even slightly better results. That's really awesome!
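The scheduler trick can be sketched with `java.util.concurrent.ScheduledExecutorService`. This is a hypothetical reconstruction rather than the contents of Scheduler.scala and Config.scala: `SchedulerMock` and `facebookScheduled` are made-up names. Instead of sleeping, each request registers a timer that completes its `Promise` after the delay, so a single thread can keep thousands of responses pending at once.

```scala
import java.util.concurrent.{Executors, ScheduledExecutorService, ThreadFactory, TimeUnit}
import scala.concurrent.{Future, Promise}

object SchedulerMock {
  val DummyBody: String = """{"id":"1234567890","name":"Mock User"}"""

  // Daemon thread so this standalone example does not keep the JVM alive.
  private val daemonThreads: ThreadFactory = (r: Runnable) => {
    val t = new Thread(r, "mock-scheduler")
    t.setDaemon(true)
    t
  }

  // A single thread serves every delayed response.
  private val scheduler: ScheduledExecutorService =
    Executors.newScheduledThreadPool(1, daemonThreads)

  def facebookScheduled(delayMillis: Long = 1000L): Future[String] = {
    val promise = Promise[String]()
    // No thread sleeps: the scheduler fires the completion when the delay expires.
    scheduler.schedule(new Runnable {
      def run(): Unit = promise.success(DummyBody)
    }, delayMillis, TimeUnit.MILLISECONDS)
    promise.future
  }
}
```

Firing 1000 calls with a 200 ms delay each should finish in a fraction of a second of wall-clock time, rather than the 200 s one blocking thread would need. The caveat is that this works because the delay is only a timer; a real JDBC call would need a non-blocking driver, or its own thread pool, to get the same effect.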

I have always believed that programming is an art, and that one should handle it with extreme delicacy and respect to craft a marvelous piece the world will admire. This hackathon gave me one more opportunity to practice and showcase that.